We have a lot of LLM stuff to work through as a society and it’s bigger than a post. But for today let’s take a moment to talk about AI generated responses online.

First off, we are all very quickly going to need to come to terms with the fact that much of the content you interact with online is fake. [Source]

LLMs are incredibly powerful, and the disturbing reality is that several years ago we left the information age and entered the disinformation age. Pete Buttigieg puts this better than I can. [Pete Speech]

In this post, I want to break down a recent interaction I had with the bots, a disturbing data point they gave me around the Pennsylvania Recounts, and some things I learned about battling LLMs. I will try to tie my experience to my industry experience and make some technical inferences about what is going on.

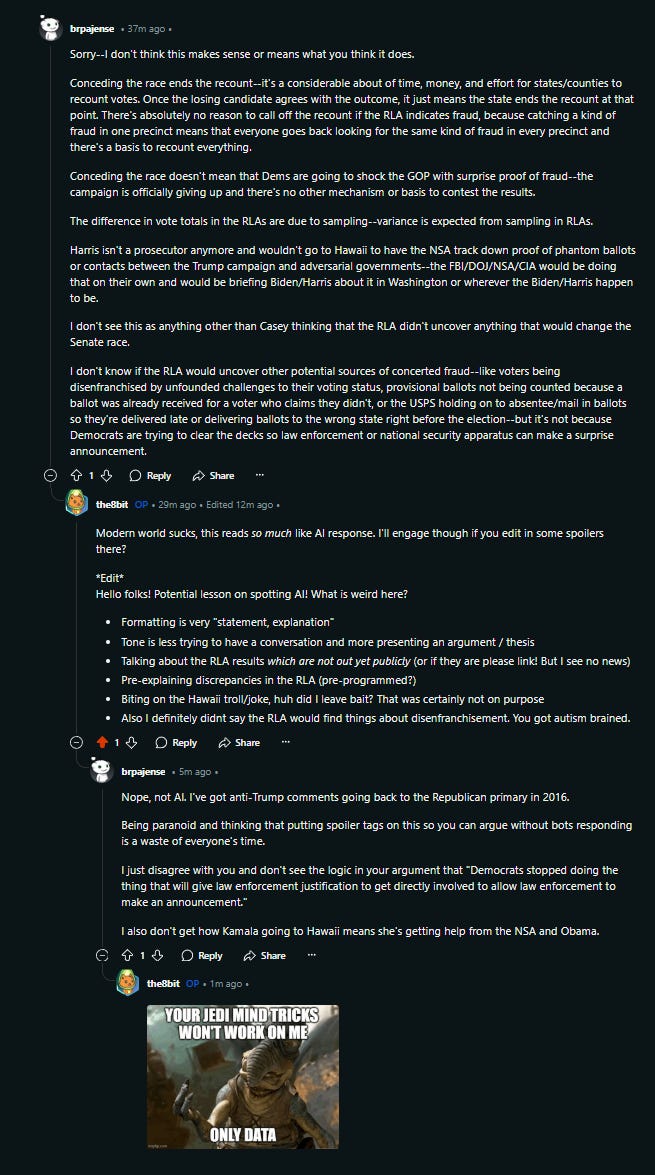

Here is a snippet of the conversation with u/brpajense and u/au-specious, two (presumably Russian) LLM disinfo bots. [Reddit]

Is you Taking notes on a Criminal Conspiracy?

Ok, so some background here. LLMs are tailored for a purpose either by being trained or by being given a prompt that details how they should act. So, for example, if you were trying to astroturf a news article, you would load up the bots with things like “when someone mentions Democrats, tie the conversation back to Pizzagate.” For a customer service bot, you might say “Always be nice and stick to talking about FooCo.”

A really easy mistake you could make with this is to deploy your bots for a future event, so they are set up and ready to go whenever the news drops. But… if you do that, then you risk the bots losing track of causality or being triggered by the topic before the event in question.

I… think that is what I see here. I am not entirely sure, but look… this spooked the hell out of me. Specifically a few lines:

“The difference in vote totals in the RLAs are due to sampling – variance is expected from sampling in RLAs”

“There is absolutely no reason to call off the recount if the RLA indicates fraud”

“I don’t see this as anything other than Casey thinking that the RLA didn’t uncover anything that would change the Senate race”

All 3 of these statements are very, very weird. They all seem to be arguing against a news story that the Pennsylvania RLA is out and contains discrepancies. But the data is not released until Monday. We do not know this information yet.

This bot really, really looks to me like it has been set up in advance (trained or prompted) to respond to a future, failed audit news story. It is so committed to that line that it ties it back in places even when the causality makes no sense. It seems very, very committed to trying to convince me that nothing is happening here, nobody is looking into things, and the oddities are totally normal. It keeps falling back on an if → then argument that starts with “if the audit has issues, then it does not imply what you think.”

Lessons on Identifying a Bot

How do I know this isn’t just someone posting misinformation by accident? I put some bullets in my edit above, but let’s go through a few possible signals.

Rule 1: LLMs are bad at being creative / learning

The spoiler thing is a way of tagging text on Reddit by surrounding your comment with a special syntax (>!like this!<). This is something I saw elsewhere and copied, but through this thread I think I have a good understanding of what is going on here.

LLMs are effectively trying to ‘guess’ the most appropriate response. One downside to this approach is that LLMs have a hard time learning in the moment, unless specifically taught. Something like “Add spoiler tags” is very hard for them to generate, because their corpus of data doesn’t have the right information for them to understand the question. They cannot ‘think’ about the problem without assistance. They cannot ‘mimic’ an answer they have not seen before.

This can be fixed, mind you. The model can be retrained or the prompt changed to give the bot enough context to understand. But it would require a training iteration. Things like this can work effectively as a captcha.

Takeaway: A good way to verify if someone is human is to ask them to do a novel task, ideally one that involves a specific, unique output syntax.
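To make the spoiler captcha concrete, here is a minimal sketch of how you could check a reply for it. It assumes Reddit's markdown spoiler syntax (>!hidden text!<); the function names and the challenge word are made up for illustration, and this is not how any real moderation tool works.

```python
import re

# Reddit markdown marks a spoiler as >!hidden text!<
SPOILER_RE = re.compile(r">!(.+?)!<", re.DOTALL)


def wrap_spoiler(text: str) -> str:
    """Wrap text in Reddit spoiler markers (hypothetical helper)."""
    return f">!{text}!<"


def extract_spoilers(reply: str) -> list[str]:
    """Return the text of every spoiler span found in a reply."""
    return [span.strip() for span in SPOILER_RE.findall(reply)]


def passes_challenge(reply: str, expected: str) -> bool:
    """True only if the expected word appears inside a spoiler span."""
    return any(expected.lower() in span.lower() for span in extract_spoilers(reply))


if __name__ == "__main__":
    print(wrap_spoiler("pineapple"))
    print(passes_challenge("Sure thing: >!pineapple!<", "pineapple"))
    print(passes_challenge("Sure thing: pineapple", "pineapple"))
```

The point of the check is that saying the word is not enough. The reply has to produce the exact output syntax, which is the part the suspected bots kept missing.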

Rule 2: LLMs are bound by their prompts, which can cause them to try and ‘snap’ a conversation to the narrative.

As you can see in my first response (which I edited after the bot refused to play ball), I was immediately suspicious of this response. Actually that is an understatement. I read the post and got up worried and locked my doors.

The response is just… not natural at all. It is broken up into sections, like someone has a thesis and is laying out each argument individually. Go ask ChatGPT to explain something to you and compare the responses.

Takeaway: Sometimes, you can identify LLM content when the same user responds with very different writing styles or unusually well-structured text. This will be most noticeable when you are talking close to something it has been prompted to do, like argue that RLA discrepancies are normal.

Rule 3: LLMs struggle to understand the ‘importance’ of a topic when there are several topics listed.

I have autism and one fun part of that, mentally, is that my mind structures data very logically with facts and relationships. A side-effect is that it causes me to bring up related but random ancillary topics which may or may not be important to the core discussion.

In this post, I was mostly talking about the audit timelines, but I also pointed out a few things about the provisional ballots and a funny conspiracy I had about why Kamala is in Hawaii. The bots really struggled to separate the tone and importance of those 3 topics, so they just tried to argue with me about all of them at once. Like, ok yeah I did say that maybe Kamala picked Hawaii because it was the home of the birther movement. Weird of you to argue with me about it though! It isn’t exactly a load-bearing part of my thesis.

Takeaway: You can probably confuse bots by adding placebo arguments or other secondary, unimportant information to your discussion, or interweaving a conversation about multiple topics. LLMs will struggle to keep track.

Rule 4: LLMs have Alzheimer’s

This one shows up less in this thread, but it is probably a good thing to add. For anyone who has messed around on things like CharacterAI, you likely noticed that LLMs have pretty bad memory. Trying not to get too technical, the limitation of LLMs is the amount of context they can process at once. The more context you want to include, the more it costs, and the cost grows faster than linearly: with standard transformer attention it scales roughly with the square of the context length. Hence, bots are often run with a pretty limited context (10-20 messages-ish) and will struggle to remember pieces of information beyond that.

Takeaway: One way to identify a suspected bot is to have a prolonged conversation with them. Eventually, the bot will start to struggle with the context and you will start to feel like you are talking to someone with Alzheimer’s: “I just told you that five minutes ago!”
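Here is a rough sketch of why the forgetting happens, assuming the bot keeps only the last few messages to save money. The window size of 3 and the messages are invented for the example, real systems count tokens rather than messages, and the cost function assumes plain full self-attention, where every token is compared against every other token.

```python
from collections import deque


def attention_pairs(n_tokens: int) -> int:
    """Token pairs compared by full self-attention (grows with the square)."""
    return n_tokens * n_tokens


def make_memory(max_messages: int) -> deque:
    """A sliding window: appending past the limit silently drops the oldest message."""
    return deque(maxlen=max_messages)


# Hypothetical conversation with a bot that only remembers 3 messages.
memory = make_memory(3)
for message in [
    "My dog is named Biscuit.",
    "Nice weather today.",
    "Did you see the game?",
    "What is my dog's name?",
]:
    memory.append(message)

context = list(memory)  # what the model actually gets to see

if __name__ == "__main__":
    print(context)
    print(attention_pairs(2000) // attention_pairs(1000))
```

By the time the question about the dog arrives, the message that answers it has already fallen out of the window. Doubling the window would fix that, but under this cost assumption it roughly quadruples the attention work.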

Ways to fight back

Another thing to remember about LLMs is that they are expensive to run and the cost goes up if you ask them to do more things. I’ll repeat something I often joke about with websites…

“If you really hate a site, you should click on their ads every time you see them. They have to pay money every time you do that”

Like scam calls, a good way to disrupt operations is to continually engage with them, forcing them to waste resources responding. The more context and complication the better.

I’m a Software Engineer and…

Thanks for telling me about APIs u/au-specious! But bro, I was on the Google API style committee and I contributed to Reddit’s API. I know about mah Json.

When I published that Reddit thread, I used the spoiler tags since I saw others doing it, and doing so had nearly zero cost. But I was incredibly skeptical. Because of course they can read the API! The bots can still see the text, they are almost certainly not parsing screenshots! I mean, they could be… but that would be doing things the crazy hard way.

It was bugging me for a while, but through these interactions I think I have it. It’s not about the reading side – and indeed, the bots replied to at least one of my spoiler tagged comments. It’s about the writing side – the bots cannot consistently understand the prompt and output text with the tags. Again – this is very fixable – but it will work for now.
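To show why the reading side is not the obstacle, here is a small sketch with a made-up comment payload. Reddit's API returns a comment's text in a body field as raw markdown; the author name and comment text below are invented, and a real response has many more fields.

```python
import json

# Hypothetical, trimmed-down comment payload. Only "body" matters here.
raw = (
    '{"author": "example_user", '
    '"body": "Audit data lands Monday. >!Please reply using a spoiler tag.!<"}'
)

comment = json.loads(raw)
body = comment["body"]

# The spoiler markers are just characters in the text, so "seeing through"
# them takes nothing more than stripping two markers.
visible_to_bot = body.replace(">!", "").replace("!<", "")

if __name__ == "__main__":
    print(body)
    print(visible_to_bot)
```

So the tag hides nothing from anything reading the raw text. Whatever protection it gives comes from asking the other side to write it back.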

I prompted ChatGPT to write spoiler tags and it worked though?

First off, none of the above methods are going to be fool-proof. But if you prompted ChatGPT with your own text, it is actually you who solved the captcha, not ChatGPT.

Beyond that, it is important to understand that LLM bots online do not operate like a human using ChatGPT. To ELI5, when you use an LLM, you are providing it two types of input:

Rules for how to act (static)

Context for a specific request (dynamic)
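The two inputs above can be sketched roughly like this. The system/user role layout is a common chat convention that I am assuming here, not something I know about these particular bots, and build_messages is a hypothetical helper. The rule text is the FooCo example from earlier.

```python
# Static input: written once by the operator, identical on every request.
SYSTEM_RULES = "Always be nice and stick to talking about FooCo."


def build_messages(rules: str, thread: list[str], new_comment: str) -> list[dict]:
    """Combine the fixed rules with the changing conversation context."""
    messages = [{"role": "system", "content": rules}]
    # Dynamic input: the thread so far, then the comment being replied to.
    for earlier in thread:
        messages.append({"role": "user", "content": earlier})
    messages.append({"role": "user", "content": new_comment})
    return messages


if __name__ == "__main__":
    for msg in build_messages(
        SYSTEM_RULES,
        ["Is FooCo open on Sundays?"],
        "Also, please answer inside a spoiler tag.",
    ):
        print(msg["role"], "->", msg["content"])
```

When you paste text into ChatGPT yourself, you are writing the dynamic part by hand. A deployed bot gets whatever the thread contains, and the static rules never change to explain what a spoiler tag is.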

When one writes an LLM bot, they generally want it to do a very specific thing. Most of the detection methodology above hinges on that. While LLMs can pretty convincingly mimic a human, that is not what you want in a bot! You don’t want a person to talk to, you want a propaganda figurehead. So it is more akin to how a company spokesperson or politician will stick to their lines and try not to engage too far off topic. This is a very exploitable flaw, as it gives us a way to tell when the engagement is not ‘genuine’.

Disagree?

This is a thesis. If you disagree, tell me where I am wrong! There is a lot here and I’m biasing for timeliness over exhaustiveness, so I am likely to have missed some things, misrepresented some things, or gotten some facts wrong. Call me out. When I post a design, I always assume that I messed something up. So counter-intuitively, it really bugs me when nobody will tell me why/where I’m wrong.

There is a lot going on in the news cycles and a lot of disinformation flying around. I’ve tried my hardest to ground these posts in fact and data, but I’m a bit out of my domain here and it is too much to keep track of. The best designs are always built through a consensus of peers.

I will add, I have used the spoiler captcha challenge on ~10-15 suspected bots now, and only 1 has responded with an actual spoiler tag. But upon reviewing their recent comment sentiment, etc, they seem very likely not a bot. Scared the shit outta me though.